What’s love got to do with it?

OPINION

As it turns out, a lot – enabling hate speech has consequences

It was reported on August 6, 2024 that Elon Musk and his social media platform X (fka Twitter) have sued the World Federation of Advertisers and member companies including Unilever, Mars, and CVS Health, among others. According to X, the lawsuit in part stems from evidence uncovered by the U.S. House Judiciary Committee which X says shows a “group of companies organized a systematic illegal boycott” against the social media platform.

In short, X is frustrated that there are consequences from the actions taken by the organization and its current owner, Musk.

While this plays out in court, let’s do our own analysis from inside the industry as to how we got to the current situation – and what love has to do with it.

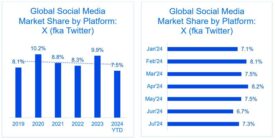

First, a high-level look at X’s performance over the last few years shows that while it averaged just above 10% of the annual global social media market share in 2020, and came close to an average of 10% in 2023, 2024 has so far brought the lowest year-to-date share of the last five years. Even April 2024, the month with the highest share so far in 2024 at 8.22%, is still lower than the average of the past four years.

Source: Statcounter GlobalStats

Musk bought X (then Twitter) in 2022. Since then, he has made a number of controversial changes, including rebranding the company to X, releasing the “Twitter Files” (a series of releases of select internal Twitter documents), suspending journalists’ accounts, and introducing temporary measures like labeling media outlets as “state-affiliated” and restricting their visibility. The platform has also faced challenges such as viral misinformation and hate speech.

While individuals at companies may have varying opinions about some of those decisions, it’s the latter ones that impact advertisers – they want brand safety, to avoid content or contexts that are harmful to a brand’s reputation. In 2022, X was at the center of brand safety conversations when ads mistakenly ran alongside content related to child sexual abuse.

X is not the sole platform challenged with managing brand safety. In fact, Integral Ad Science’s The 2024 Industry Pulse Report found that brand safety (“ads delivering alongside risky content or fake news”) was tied with “maximizing yield” as the number one challenge facing US publishers.1 The difference in X’s case is that it has in Musk an owner who is focused on combatting advertisers instead of addressing the challenge at hand.

Also at issue is a concept that’s similar to brand safety but slightly different, brand suitability – “avoiding content or context that is not aligned with a brand’s values, voice, or audience.” As more companies understand the need for and support a more diverse and inclusive world, this concept becomes especially important. And that’s where love comes into play. Musk and X’s changes to the platform have resulted in increased hate speech and a reduction in safe spaces for those in marginalized communities.

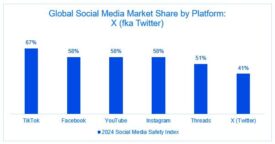

Within the LGBTQIA+ community, for instance, GLAAD’s 2024 Social Media Safety Index ranks X the lowest among six major social media platforms at 41%, compared to TikTok’s high score of 67% (which technically still earns only a “D+”; all other social media platforms, including X, scored an “F”).

Advertisers now weigh a multitude of factors in determining where their message will best reach the intended audience in the right context.

Which leads us back to the WFA, a global association that represents over 150 of the world’s biggest brands and more than 60 national advertiser associations worldwide, accounting for approximately 90% of global marketing communications spend (roughly US $900 billion per year). In 2019, WFA established the Global Alliance for Responsible Media (GARM) to “help the industry address the challenge of illegal or harmful content on digital media platforms and its monetization via advertising.”

And while Musk claims this group “illegally” harmed X’s business, GARM’s site specifically states:

GARM’s core focus is to provide tools to help avoid the inadvertent advertising support of demonstrable online and offline harm.

GARM is not involved in any decision-making steps relative to the measurement of content to determine level of risk or any tasks related to the categorization of content, sites or creators. Instead, individual companies may rely on third-party ratings services to deliver on these objectives.

Crucially, GARM is not involved in the decisions relating to the allocation of budgets. GARM does not interfere with a member’s decision as to whether or not to invest advertising resources on a particular website or channel. Instead, GARM focuses on creating a voluntary framework that can be used by individual advertisers to better utilize advertising resources in accordance with their own goals and values.

Based on this, it seems clear that Musk and X are committed to digging themselves into a deeper hole with advertisers, rather than making a conscious effort to work together toward a better, more love-filled digital future.