Telegram CEO arrest spurs debate over criminal liability for harmful online content

French authorities on Wednesday charged Telegram CEO Pavel Durov with a number of crimes related to illegal activity on the app.

The six charges include “complicity” in the “gang distribution of images of minors presenting child pornography” and “drug trafficking”.

Telegram is also accused of failing to respond to judicial requests and to cooperate with authorities when required by law to do so.

In the immediate term, Durov has avoided jail by posting €5m bail while his case goes to trial. He will not be allowed to leave France and must check in at a police station twice a week.

Durov was detained in Paris last weekend as part of a cyber-criminality probe that began in February.

What is Telegram?

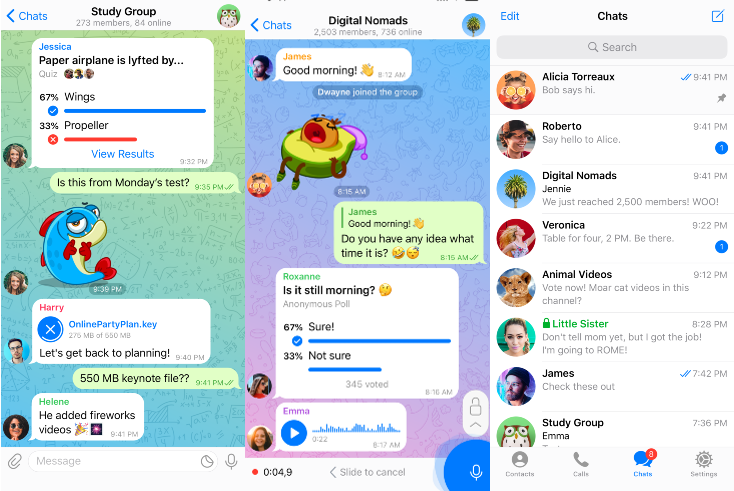

Telegram is a social media platform that offers voice and text messaging and allows users to post stories and create public groups of up to 200,000 people.

It is especially popular in backsliding democracies and autocracies due to its liberal approach to content moderation and purported care towards user privacy — although critics have warned that its encryption standards are not as robust as claimed.

Countries where the app is especially prominent include Russia and other post-Soviet nations, as well as India and Iran.

In an interview with the Financial Times in March, Durov claimed that Telegram had around 900m global monthly active users and was nearing profitability.

According to a separate report by the Financial Times, Telegram is suspected of understating its number of EU users in order to stay under a threshold above which it would be subject to additional regulation.

While Durov has eschewed aggressively monetising the platform in favour of independently funding the project, it does offer brands the ability to send sponsored messages in public one-to-many channels with over 1,000 subscribers. However, Telegram says it does not collect or analyse user data in support of such messaging.

The platform was founded by Durov and his brother Nikolai in 2013 to help resist Russian government pressure against freedom of expression. The duo had previously founded a separate popular Russian social media service, Vkontakte, before being pushed out in 2014. Durov left Russia and has since run Telegram via its headquarters in Dubai as well as on various servers all around the world. He holds citizenship in Russia, Saint Kitts and Nevis, the United Arab Emirates and France.

Durov is a somewhat eccentric character — he has posted on social media about donating sperm to “open source” his DNA, claiming he has fathered over 100 children. He regularly posts shirtless photos of himself as a way to mock Russian president Vladimir Putin and, in one 2012 stunt, tossed 5,000-rouble notes out of his office window in Saint Petersburg, leading to a brawl on the streets.

Analysis: Defining the line for criminal liability on social media

The move to detain and ultimately charge Durov is considered extraordinary. While social media CEOs have often been obliged to appear before government bodies to discuss the safety of their platforms, never before has one actually been arrested on suspicion of criminal liability for what occurs on their platform.

Durov’s arrest has thus reignited the debate over whether, and to what degree, governments should clamp down on social platforms that fail to comply with efforts to tackle harmful content.

It is true that Durov has taken a laissez-faire approach to moderating his platform, which has naturally attracted terrorists, extremists, gun runners, scammers and drug dealers, among others.

When asked by anti-child-exploitation advocacy groups to address the spread of child abuse content on the platform, Telegram reportedly ignored their concerns. This is distinct from other social platforms, which have made public commitments to tackling harmful content, even if their capacity to execute on those commitments has often been lacking.

John Shehan, senior vice-president of the US National Center for Missing & Exploited Children’s Exploited Children Division, told NBC News that Telegram “is truly in a league of their own as far as their lack of content moderation or even interest in preventing child sexual exploitation activity on their platform”.

A spokesperson for Telegram has argued that its content moderation is “within industry standards and constantly improving” and that it is “absurd to claim that a platform or its owner is responsible for abuse of that platform”.

Various far-right extremists have also come to Telegram’s defence, including ex-Fox News presenter Tucker Carlson, white nationalist Nick Fuentes and X owner Elon Musk.

‘Free speech’ debate and government pressure

Musk has perhaps been the loudest figure arguing for the importance of “free speech” in online spaces, labelling himself a “free speech absolutist” and dismantling Twitter’s content moderation team following his purchase of the platform in 2022. However, critics have pointed out that since buying the company, accounts of journalists and activist groups that he disagrees with, including pro-Kamala Harris account White Dudes for Harris, have been locked or throttled.

The arrest of Durov raises the question of if and when it becomes appropriate to hold the proprietor of a platform criminally accountable for the speech and actions of its users. In the case of Durov, the suggestion is that had Telegram been at least willing to cooperate with the authorities and make an effort to moderate harmful content, his arrest might well have been avoided.

In any event, prosecutors have collected a wealth of evidence to suggest a large amount of unchecked criminal behaviour conducted via Telegram. The suggestion that Durov be held responsible for negligence could make for a compelling legal argument.

It is also true, however, that governments may attack social media companies for other reasons. In the US, Republicans have sought to vilify social media companies — in particular Meta and TikTok — for restricting speech on their platforms, such as through suspending accounts when they are identified as spreading misinformation related to elections or public health.

Meta CEO Mark Zuckerberg has also been personally attacked by Republicans for donating more than $400m to help facilitate safe voting during the 2020 presidential election. Republicans, who have been attempting numerous voter-suppression tactics for years, suggested Zuckerberg made the donation to aid Joe Biden’s campaign.

In response to Republican pressure earlier this week, Zuckerberg conceded that Meta was pressured in 2021 by the Biden administration to moderate and censor posts, including memes and satire, related to the Covid-19 pandemic. He expressed regret about doing so and suggested Meta would react differently today. Zuckerberg also said he would not be donating again to support safe voting efforts.

Business Insider media and tech correspondent Peter Kafka noted that, in response to government pressure and the changing consensus around the “free speech” debate, social media companies have become wary of over-moderating content.

“I think the pendulum in Silicon Valley is sort of moving back away from ‘let’s moderate a lot’ to ‘maybe let’s moderate less’,” Kafka said on ABC News’ Start Here podcast.

“But, to be clear, if you run one of these big internet companies, you have to moderate stuff,” he continued. “You will be under tremendous legal liability if you don’t. If you don’t want to moderate anything, don’t run a big platform. And that’s going to be the issue with Telegram and France.”

What is the right balance between freedom of speech and expression on privately owned social platforms and the potential for harm? Social media has greatly democratised the information ecosystem. That means more information, but also more disinformation, misinformation, hate speech, child abuse content and other predatory behaviour. Will consumers and governments accept that trade-off? Or is a different approach needed?

Advertisers, for their part, clearly don’t want to be associated with those harms. Hence brands have expressed little interest in spending on X in particular, as it has moved away from robustly moderating harmful content.